How Nano Banana Pro Is Generating Fake Aadhaar and PAN Cards – Detailed Report

Google’s Gemini Nano Banana Pro model has gone viral across social media over the past week for all the right reasons: improved character consistency, support for 4K AI image generation, impressive editing tools, and even direct integration with Google Search. Since its release, users have been quick to experiment with real-world use cases, from stylish AI portraits and LinkedIn infographic makeovers to whiteboard-style visual summaries of complex text. The excitement around this new model is undeniable.

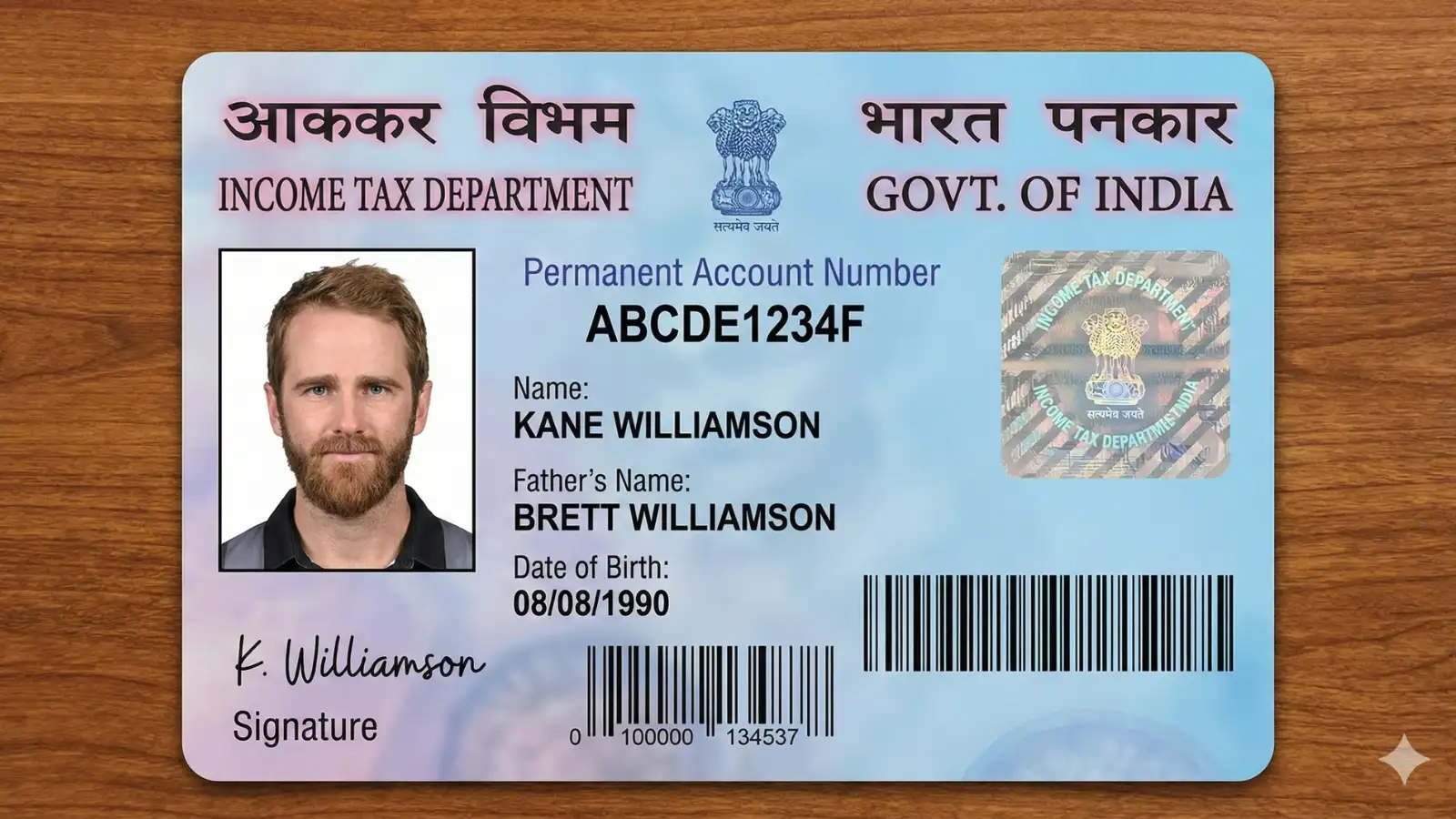

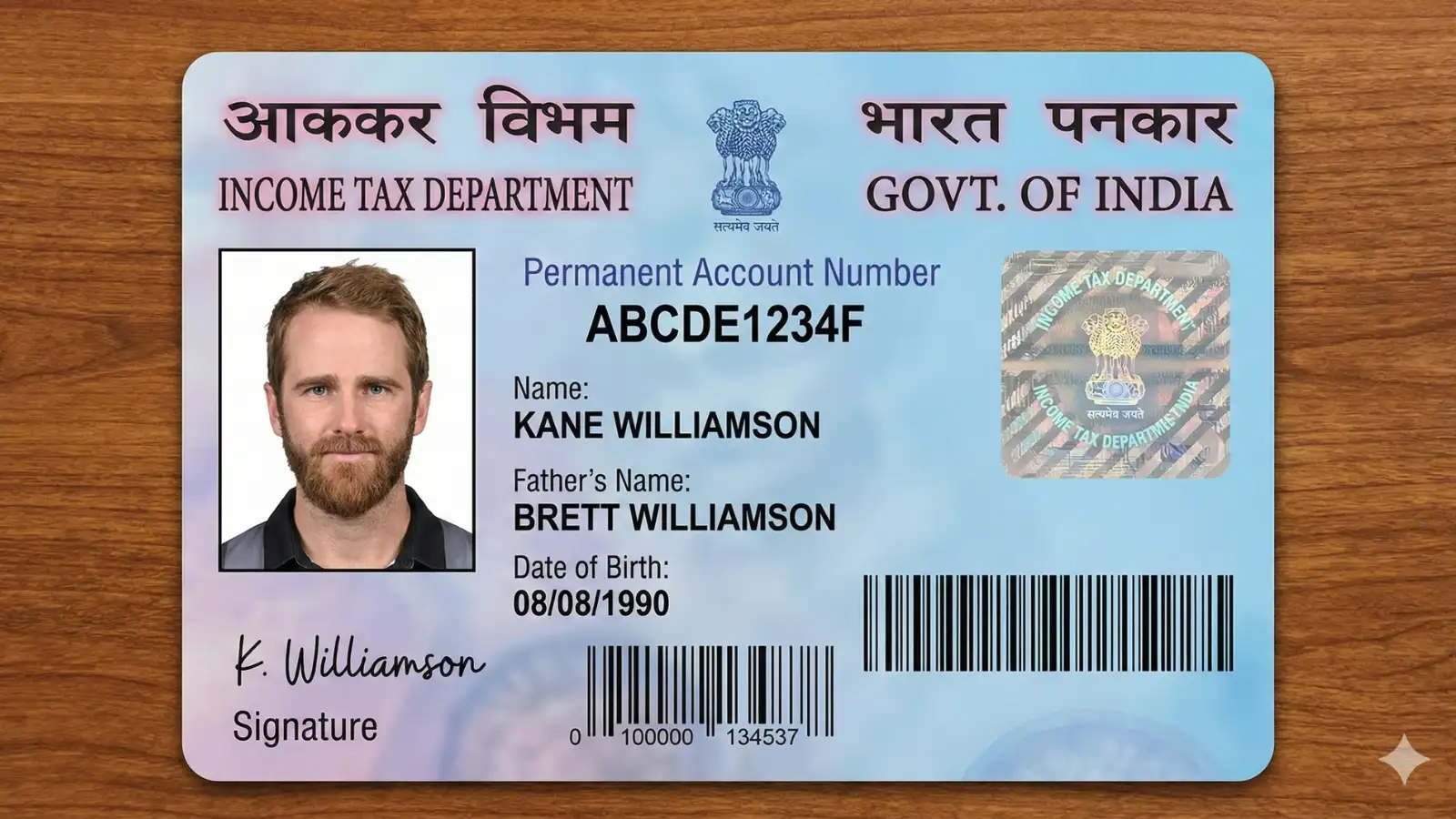

However, alongside the praise, several users have begun raising concerns about potential Google AI safety issues. Nano Banana Pro’s hyper-realistic output has revealed an unexpected risk: the model can generate fake Indian identity documents, such as Aadhaar and PAN cards, with alarming accuracy. This raises serious AI misuse threats, especially in a country where identity verification is essential across daily life.

During testing, we attempted to create mock Aadhaar and PAN cards using Gemini Nano Banana Pro, and the ease with which it produced them was surprising. The model not only generated these documents without hesitation but also inserted the user's photo, standard identifiers and the made-up details we provided. For a tool positioned as safe and controlled, this loophole is concerning for digital security and personal privacy.

For safety reasons, we are not sharing the prompt used to generate these fake IDs.

As expected, the generated images do carry a visible Gemini watermark, a step Google uses to improve transparency. But this watermark is relatively easy to remove using basic editing tools. Google also embeds invisible SynthID markers within all AI image generation outputs, but these can only be detected with specialised tools, not by an average person glancing at an ID at an airport, bank counter or mall checkpoint. The risk of these realistic documents being mistaken for genuine identity proofs remains a serious gap in Google's AI safety measures.

What makes this even more surprising is that Gemini models often refuse requests containing sexual, violent or sensitive content, yet something as sensitive as fake ID generation slipped through. Social media users have already highlighted multiple cases where Gemini refused harmless requests due to strict safety filters, raising questions about how such a basic but critical misuse case was overlooked.

This isn’t the first time AI has crossed this line. During the viral “Ghibli moment” involving ChatGPT (GPT-4o), OpenAI's model was also caught generating highly realistic Aadhaar and PAN cards. But the issue is significantly more worrying now because Gemini Nano Banana Pro is far more capable and lifelike in its output, making these AI misuse threats harder to detect and easier to exploit.


